Let's process sound with Go

Disclaimer: this article doesn't cover algorithms or APIs for sound processing and speech recognition. It is about the problems of audio processing and how to solve them with Go.

phono is an application framework for sound processing. Its main purpose is to build a pipeline out of different technologies to process sound the way you need.

What is the pipeline for, why combine different technologies, and why do we need another framework? Let's figure that out.

Whence the sound?

In 2018 sound became a standard way of interaction between humans and technology. Most IT giants either already have a voice assistant or are working on one right now. Voice control is integrated into major operating systems, and voice messages are a default feature of any modern messenger. There are around a thousand start-ups working on natural language processing and around two hundred on speech recognition.

It's the same story with music. It is played from any device, and sound recording is available to anyone who has a computer. Music software is developed by hundreds of companies and thousands of enthusiasts around the globe.

Common tasks

If you have ever performed any sound-processing tasks, the following conditions should be familiar to you:

- Audio should be received from file, device, network, etc.

- Audio should be processed: add FX, encode, analyze, etc.

- Audio should be sent into file, device, network, etc.

- Data is transmitted in small buffers

Together this forms a pipeline: a data stream goes through several stages of processing.

Solutions

For clarity, let's take a real-life problem. We need to transform voice into text:

- Record audio with a device

- Remove noises

- Equalize

- Send signal to a voice-recognition API

Like any other problem, this one has several solutions.

Brute force

For hardcore developers (wheel inventors) only. Record sound directly through a sound interface driver, then write a smart noise suppressor and a multi-band equalizer. This is very interesting, but you can forget about your original task for several months.

Time-consuming and very complex.

Normal way

The alternative is to use existing audio APIs. It’s possible to record audio with ASIO, CoreAudio, PortAudio, ALSA and others. Multiple standards of plugins are available for processing: AAX, VST2, VST3, AU.

A rich selection doesn’t mean that it’s possible to use everything. Usually the following limitations apply:

- Operating system. Not all APIs are available on all operating systems. For example, AU is a native OS X technology and is available only there.

- Programming language. The majority of audio libraries are written in C or C++. In 1996 Steinberg released the first version of the VST SDK, and VST is still the most popular plugin format. Today you no longer have to write in C/C++: there are VST wrappers in Java, Python, C#, Rust and many others. The language remains a limitation, but today people process sound even with JavaScript.

- Functionality. If the problem is simple, there is no need to write a new application: FFmpeg has a ton of features.

In this case the complexity depends on your choices. In the worst case, you'll have to deal with multiple libraries and, if you're really unlucky, with complex abstractions and completely different interfaces.

What do we have in the end?

We have to choose between very complicated and complicated:

- deal with several low-level APIs to invent our own wheels

- deal with several APIs and try to make them friends

It doesn't matter which way you choose: the task always comes down to a pipeline. The technologies may differ, but the essence is unchanged. Again, the trouble is that instead of solving the actual problem, we have to reinvent the wheel and build a pipeline.

But there's another option.

phono

phono was created to solve the common task of "receive, process and send" for sound. It uses a pipeline as the most natural abstraction. A post on the official Go blog describes the pipeline pattern. Its core idea is that several stages of data processing work independently and exchange data through channels. That's exactly what is needed.
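To make the pattern concrete, here is a minimal sketch of three stages connected by channels. It is not phono's code: the `buffer` type and the `pump`, `gain`, `sink` and `runPipeline` functions are names I made up for illustration, and the only assumption is that audio travels as small slices of samples.

```go
package main

import "fmt"

// buffer is a hypothetical block of mono samples (not phono's type).
type buffer []float64

// pump emits the signal in fixed-size buffers and closes its output when done.
func pump(signal []float64, size int) <-chan buffer {
	out := make(chan buffer)
	go func() {
		defer close(out)
		for start := 0; start < len(signal); start += size {
			end := start + size
			if end > len(signal) {
				end = len(signal)
			}
			out <- buffer(signal[start:end])
		}
	}()
	return out
}

// gain is a processor stage: it scales every sample by factor.
func gain(in <-chan buffer, factor float64) <-chan buffer {
	out := make(chan buffer)
	go func() {
		defer close(out)
		for b := range in {
			scaled := make(buffer, len(b))
			for i, s := range b {
				scaled[i] = s * factor
			}
			out <- scaled
		}
	}()
	return out
}

// sink drains the stream and collects all samples.
func sink(in <-chan buffer) []float64 {
	var result []float64
	for b := range in {
		result = append(result, b...)
	}
	return result
}

// runPipeline wires the stages together: pump -> gain -> sink.
func runPipeline(signal []float64, size int, factor float64) []float64 {
	return sink(gain(pump(signal, size), factor))
}

func main() {
	fmt.Println(runPipeline([]float64{1, 2, 3, 4, 5}, 2, 0.5)) // prints [0.5 1 1.5 2 2.5]
}
```

Each stage runs in its own goroutine and knows nothing about its neighbours except the channel type, which is what makes stages easy to swap.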

But, why Go?

First, a lot of audio software and libraries are written in C, and Go is often called its successor. On top of that, there is cgo and a big variety of bindings for existing audio APIs that we can take and use.

Second, in my opinion, Go is a good language. I don't want to dive deep, but I'll note its concurrency features. Channels and goroutines make pipeline implementation significantly easier.

Abstractions

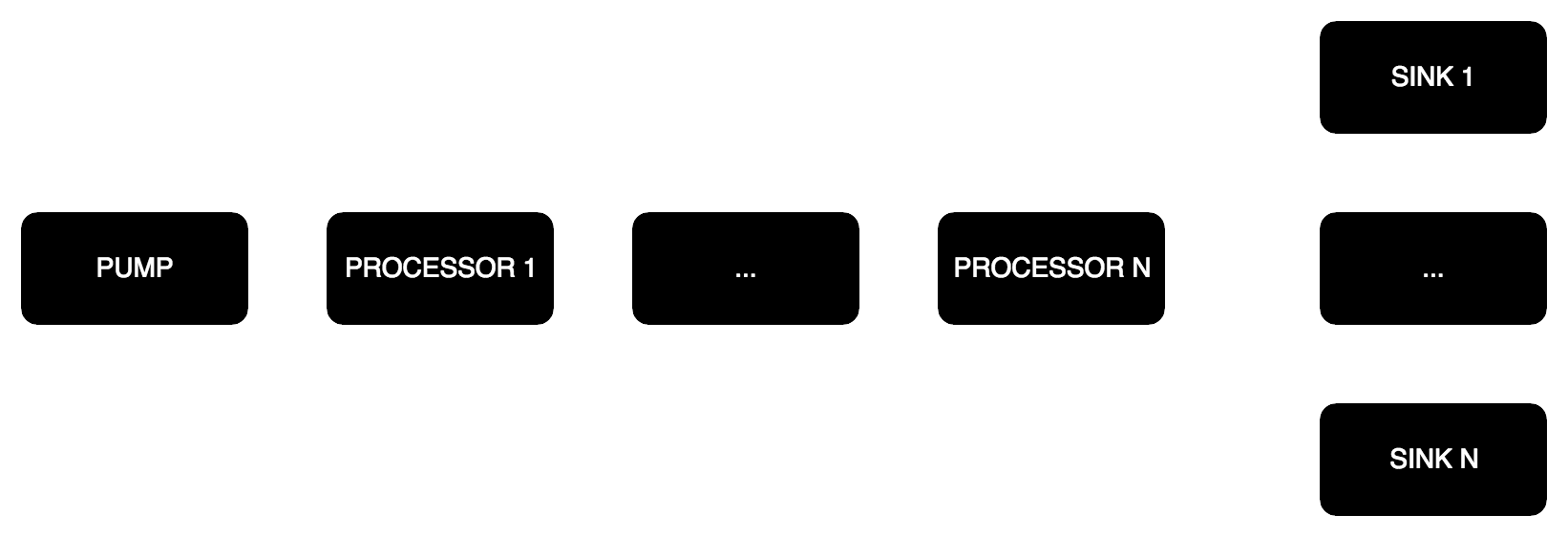

The pipe.Pipe struct is the heart of phono: it implements the pipeline pattern. Just as in the blog example, there are three stages defined:

- pipe.Pump - receive sound, only output channels

- pipe.Processor - process sound, input and output channels

- pipe.Sink - send sound, only input channels

Data is transferred in buffers within a pipe.Pipe. The rules for building a pipe:

- Single pipe.Pump

- Multiple pipe.Processor, placed sequentially

- Single or multiple pipe.Sink, placed in parallel

- All pipe.Pipe components should have the same:

  - Buffer size

  - Sample rate

  - Number of channels

The minimal configuration is a Pump with a single Sink; the rest is optional.
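The rules above can be written down as a small check. This is only a sketch under my own assumptions: the `params` and `layout` types and the `validate` function are hypothetical and do not exist in phono, which may enforce these rules differently.

```go
package main

import (
	"errors"
	"fmt"
)

// params holds the properties every stage must agree on.
// Hypothetical type for illustration; not part of phono.
type params struct {
	bufferSize  int
	sampleRate  int
	numChannels int
}

// layout describes a pipe: one pump, processors in sequence, sinks in parallel.
type layout struct {
	pump       params
	processors []params
	sinks      []params
}

// validate checks two rules: there is at least one sink, and every
// component has the same buffer size, sample rate and channel count as the pump.
func validate(l layout) error {
	if len(l.sinks) == 0 {
		return errors.New("pipe needs at least one sink")
	}
	for i, p := range l.processors {
		if p != l.pump {
			return fmt.Errorf("processor %d: %+v does not match pump %+v", i, p, l.pump)
		}
	}
	for i, s := range l.sinks {
		if s != l.pump {
			return fmt.Errorf("sink %d: %+v does not match pump %+v", i, s, l.pump)
		}
	}
	return nil
}

func main() {
	stereo := params{bufferSize: 512, sampleRate: 44100, numChannels: 2}
	mono := params{bufferSize: 512, sampleRate: 44100, numChannels: 1}

	// Minimal configuration: a pump and a single sink.
	fmt.Println(validate(layout{pump: stereo, sinks: []params{stereo}}))
	// A sink with a different number of channels breaks the rules.
	fmt.Println(validate(layout{pump: stereo, sinks: []params{stereo, mono}}))
}
```

The "single pump" rule needs no runtime check here because the struct has room for exactly one.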

Let’s go through several examples.

Easy

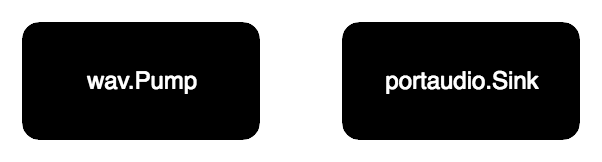

Problem: play a wav file.

Let's express the problem in "receive, process, send" form:

- Receive audio from wav file

- Send audio to portaudio device

Audio is read and immediately played back.

First we create all elements of the pipeline, wav.Pump and portaudio.Sink, and pass them to the constructor pipe.New. The function p.Do(pipe.actionFn) error starts the pipeline and waits until it's done.

More difficult

Problem: cut a wav file into samples, put them into a track, then save and play the result at the same time.

A sample is a small piece of audio, and a track is a sequence of samples. In order to sample the audio, we have to put it into memory first. We can use asset.Asset from the phono/asset package for this purpose. Let's express the problem in standard steps:

- Receive audio from wav file

- Send audio to memory

Now we can make samples, add them to the track and finalize the solution:

- Receive audio from track

- Send audio to:

  - wav file

  - portaudio device

Again, there is no processing stage, but we have two pipelines now!

Compared to the previous example, there are two pipe.Pipe instances. The first one transfers data into memory, so we can do the sampling. The second one has two sinks in the final stage: wav.Sink and portaudio.Sink. With this configuration, the sound is simultaneously saved to a wav file and played.
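Sending one stream to two sinks at once is a fan-out. Here is a rough sketch of how that can look with plain channels; `buffer`, `broadcast` and `fanOut` are hypothetical names of mine, and the real sinks (wav file, portaudio device) are replaced with goroutines that just collect samples.

```go
package main

import (
	"fmt"
	"sync"
)

// buffer is a hypothetical block of samples (not phono's type).
type buffer []float64

// broadcast copies every buffer from in to n output channels
// and closes all of them once the input is exhausted.
func broadcast(in <-chan buffer, n int) []chan buffer {
	outs := make([]chan buffer, n)
	for i := range outs {
		outs[i] = make(chan buffer)
	}
	go func() {
		for b := range in {
			for _, out := range outs {
				// Each sink gets its own copy, so sinks cannot affect each other.
				out <- append(buffer(nil), b...)
			}
		}
		for _, out := range outs {
			close(out)
		}
	}()
	return outs
}

// fanOut feeds the buffers to n parallel sinks and returns what each received.
func fanOut(buffers []buffer, n int) [][]float64 {
	in := make(chan buffer)
	go func() {
		defer close(in)
		for _, b := range buffers {
			in <- b
		}
	}()

	results := make([][]float64, n)
	var wg sync.WaitGroup
	for i, out := range broadcast(in, n) {
		wg.Add(1)
		go func(i int, out <-chan buffer) {
			defer wg.Done()
			for b := range out {
				results[i] = append(results[i], b...)
			}
		}(i, out)
	}
	wg.Wait() // the pipe is done only when every sink is done
	return results
}

func main() {
	for i, r := range fanOut([]buffer{{1, 2}, {3}}, 2) {
		fmt.Printf("sink %d received %v\n", i, r)
	}
}
```

Note that in this sketch the slowest sink sets the pace for the whole pipe, since the unbuffered channels make `broadcast` wait for every receiver.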

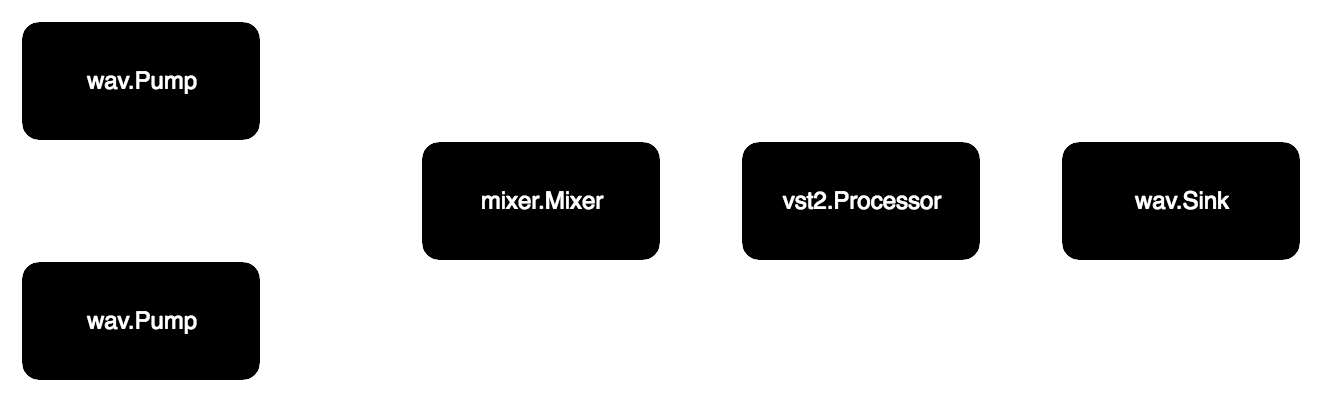

Even more difficult

Problem: read two wav files, mix them, process with vst2 plugin and save into a new wav file.

There is a simple mixer, mixer.Mixer, in the phono/mixer package. It accepts signals from multiple sources and produces a single mixed one. To achieve this, it implements both pipe.Sink and pipe.Pump at the same time.
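The idea of mixing can be sketched as a plain sample-by-sample sum of the incoming buffers. This is an illustration only, not the code of mixer.Mixer: `buffer`, `source` and `mix` are hypothetical names, and there is no normalization or volume control.

```go
package main

import "fmt"

// buffer is a hypothetical block of samples (not phono's type).
type buffer []float64

// source emits the given buffers and then closes its channel.
func source(buffers ...buffer) <-chan buffer {
	out := make(chan buffer)
	go func() {
		defer close(out)
		for _, b := range buffers {
			out <- b
		}
	}()
	return out
}

// mix takes one buffer from every input that is still open,
// sums them sample by sample and emits the result.
// It stops when all inputs are closed.
func mix(inputs ...<-chan buffer) <-chan buffer {
	out := make(chan buffer)
	go func() {
		defer close(out)
		for {
			var sum buffer
			active := 0
			for _, in := range inputs {
				b, ok := <-in
				if !ok {
					continue // this input is exhausted
				}
				active++
				if len(b) > len(sum) {
					// Grow the sum with zeros to fit the longest buffer.
					sum = append(sum, make(buffer, len(b)-len(sum))...)
				}
				for i, s := range b {
					sum[i] += s
				}
			}
			if active == 0 {
				return
			}
			out <- sum
		}
	}()
	return out
}

func main() {
	a := source(buffer{1, 2}, buffer{3})
	b := source(buffer{10, 20})
	for m := range mix(a, b) {
		fmt.Println(m)
	}
}
```

The inputs play the role of the sink side and the returned channel plays the role of the pump side, which mirrors why a mixer has to be both.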

Again, the problem consists of two smaller ones. The first one looks like this:

- Receive audio from wav file

- Send audio to mixer

Second:

- Receive audio from mixer

- Process audio with plugin

- Send audio to wav file

Here we have three instances of pipe.Pipe, all connected with a mixer. Execution is started with p.Begin(pipe.actionFn) (pipe.State, error). Unlike p.Do(pipe.actionFn) error, it doesn't block the call and just returns the expected state. The state can then be awaited with p.Wait(pipe.State) error.

What’s next?

I want phono to be a very convenient application framework. If there is a task with sound, you do not need to understand complex APIs and spend time studying the standards. All that you need is to build a pipeline with suitable elements and launch it.

In the last six months, the following packages were built:

- phono/wav - read/write wav files

- phono/vst2 - incomplete VST2 SDK bindings, can only open plug-ins and call their methods, not all structures are mapped

- phono/mixer - mixer, sums N signals, no balance or volume control

- phono/asset - sampling buffers

- phono/track - sequential read of buffers

- phono/portaudio - audio playback, experimental

In addition to this list, there is a constantly growing backlog of new ideas, among which:

- Time measurement

- Pipeline that can be changed on the fly

- HTTP pump/sink

- Parameter automation

- Resampling processor

- Balance and volume for mixer

- Real-time pump

- Synchronized pump for multiple tracks

- Full vst2

Topics for upcoming articles:

- lifecycle of pipe.Pipe: its state is managed with a finite state machine due to its complex internal structure

- how to write your own pipe stages

This is my first open-source project, so I would be happy to get any help and recommendations.